Object tracking is a core computer vision technique that enables AI systems to detect, follow, and understand how objects move through space and time. This overview explains what object tracking is, how it works, and why high-quality, well-annotated training data is essential for building reliable models used in automation, analytics, and real-world perception systems.

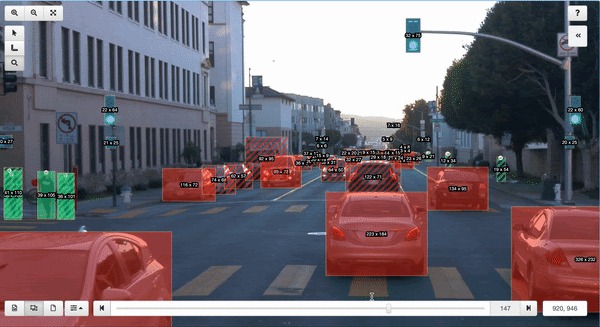

Self-driving cars, security systems, retail analytics, and traffic management are just a few examples of how organizations use computer vision tracking to analyze environments and monitor objects.

This process goes beyond simple image recognition. Tracking allows AI systems to follow objects across multiple frames, creating a temporal link that reveals how things move and interact. By maintaining consistent identities from frame to frame, object tracking provides the foundation for real-time analytics, automation, and situational awareness.

In this guide, you’ll explore:

Keep reading to learn how object tracking works, the key algorithms behind it, and why consistent, high-quality training data, image annotation, and video annotation are essential for accurate results.

Here’s a basic object tracking definition. It’s a computer vision application that detects objects and follows them, tracking movement across images or videos.

As objects are detected, they are assigned a unique identity. This step allows algorithms to understand where things are and how they move. That’s important to track the trajectory and behavior continuously.

In short, object detection identifies objects within a given frame. For example, locating people or vehicles within a frame. Object tracking then follows these unique IDs to create a continuous track.

You see this process used for things like:

There are typically two main applications:

For instance, image-based tracking might guide a robotic arm to place an object, while video-based tracking helps a self-driving car monitor pedestrians over time. Both use cases rely on consistent object identities across time (what we call persistent ID tracking) to follow objects.

Many developers prototype these systems using OpenCV tracking modules, which offer built-in algorithms for following objects across frames.

Each step is critical and requires high-quality data for training and validation. If detection accuracy is off or IDs are mismatched, the system can lose track of objects or fail to maintain consistent identities. You might see identity switches or interrupted trajectories as a result. Robust trackers are designed to handle occlusions, motion blur, scale changes, and background clutter, even when visibility is reduced.

It all starts, however, with accurate and consistent data annotation support to ensure reliable model performance and identity tracking.

Next: Let’s look at the key algorithmic components that make these tracking systems work effectively.

In computer vision, object tracking relies on three main algorithmic components: motion prediction, appearance matching, and data association. Each plays a crucial role in maintaining consistent object identities across frames, even when objects move unpredictably or become partially obscured. These models and processes vary in complexity, but conceptually they perform three main functions that together balance accuracy, efficiency, and reliability across the tracking pipeline.

Trackers estimate where an object is likely to move next, narrowing the search area for subsequent frames. The Kalman filter, for instance, predicts an object’s next position based on its prior motion. This approach balances accuracy and efficiency, allowing the system to stay stable even when visibility is reduced by occlusion or noise.

When objects overlap, leave the frame, or reappear, appearance matching helps the model recognize the same object again. It compares visual characteristics like color and shape, or deep neural network embeddings, to re-identify objects.

However, models such as DeepSORT have limitations. They can re-link detections after short occlusions but may struggle with prolonged occlusions, leading to ID drift where the system mistakenly assigns a new ID to a previously tracked object. In practice, this is a common issue that often requires human reviewers to “fix tracks” in datasets used for training or validation.

Once predictions and appearance features are computed, data association tracking links detections to existing tracks. This step matches new detections with the most similar existing objects based on both spatial proximity and visual similarity. Algorithms like DeepSORT or ByteTrack rely on efficient similarity searches within learned feature spaces to make these associations reliably.

Together, these components enable trackers to maintain persistent identities—even when objects move unpredictably, overlap, or temporarily disappear from view.

Next: Let’s explore how these techniques differ between single-object and multiple-object tracking systems.

Object tracking problems are typically grouped into one of two categories: Single Object Tracking (SOT) and Multiple Object Tracking (MOT).

In SOT, the system tracks a single target initialized in the first frame and predicts its position across frames. It then continuously predicts its position across frames. You’ll see this in applications like AR or VFX, where you’re tracking a single subject.

Because the model only tracks one target, accuracy can be high, but it requires clean initialization and continuous visibility to maintain reliable tracking.

When you need to detect and track multiple objects simultaneously, it gets much more complex when everything’s in motion. Each object needs a unique ID and 3D annotation, and must be tracked across frames. You see this in use cases such as video surveillance, self-driving vehicles, or traffic flow monitoring, where multiple objects move and interact.

MOT requires strong data association logic to prevent ID switches and maintain identity consistency across objects that may move similarly or overlap.

When comparing SOT vs MOT, both require high-quality, annotated datasets that include accurate bounding boxes and frame-level labeling to ensure each object’s ID remains consistent.

Next: Let’s examine some of the most common challenges that can disrupt object tracking performance in real-world environments.

Even advanced systems struggle with the variability of real-world conditions. Variations in lighting, movement, and environment can cause algorithms to lose track of objects or assign incorrect identities.

Below are some of the most frequent challenges in computer vision tracking and how they impact accuracy:

Addressing these challenges requires smarter training data strategies, diverse testing environments, and rigorous validation to ensure reliable model performance across conditions.

Next: Let’s bring it all together by looking at how data quality underpins every stage of effective object tracking.

Computer vision tracking lets you understand the world dynamically. Instead of analyzing each frame, tracking builds a temporal continuity that powers your advanced analytics and automation. The value is great, but it all depends on a crucial factor: data quality.

High-performing models require precise training data annotation, high-quality video and images, consistent 3D annotation, and meticulous frame-level labeling. Without well-annotated training data as a foundational component, even the best tracking algorithms will struggle to maintain identity accuracy.

If your organization is developing AI models that depend on reliable object tracking, you need data that performs as well as your algorithms. Sama provides data annotation support to help ensure tracking accuracy across complex, real-world environments.

Ready to strengthen your object tracking pipeline? Talk to the experts at Sama about a customized solution.