Instance segmentation is one of the most precise and demanding techniques in computer vision, enabling models to identify and separate individual objects at the pixel level. This guide explains how instance segmentation works, how it differs from other segmentation methods, which models are most commonly used, and where it delivers the most value in real world, high stakes applications.

![Instance Segmentation in Computer Vision [Models, Techniques & Applications]](https://cdn.prod.website-files.com/65855f99c571eeb322e2b933/694700c7246c50d754d726fa_instance-segmentation-3%20(1).png)

Computer vision capabilities have advanced to the point that they are being asked to perform tasks that humans cannot perform reliably at scale.

With jobs like manufacturing quality control, machines are tasked with counting thousands of products per hour while accurately flagging defective units. This role is well-suited to a model trained on instance segmentation.

Instance segmentation requires exact detail in terms of data preparation. Achieving this precision depends on detailed, pixel-accurate training data, which often requires annotators to carefully outline complex object shapes across large datasets.

In this guide, we break down what instance segmentation is, how it differs from other segmentation methods, how popular models work, and where these techniques are applied across real-world industries.

.webp)

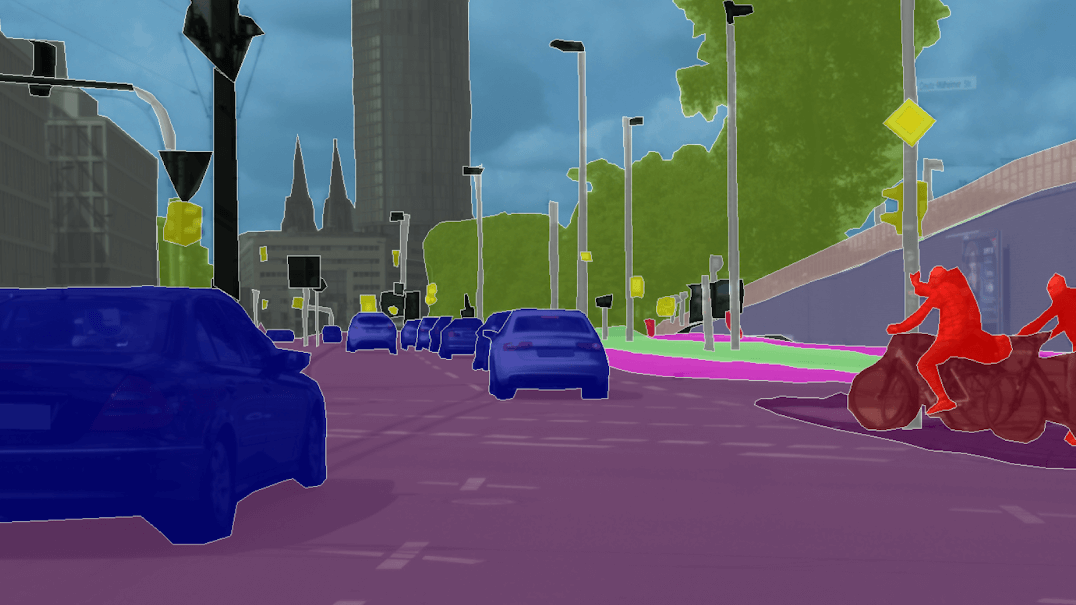

Instance segmentation is a computer vision technique that identifies each individual object in an image and generates a pixel-level mask outlining its exact shape.

Looking at the output of an instance segmentation model, you’ll usually see the contour of the target object highlighted in what’s known as a pixel-level mask. Instance segmentation goes beyond semantic segmentation by assigning individual identifiers to each target object in an image.

In simpler forms of computer vision, you’ll often see objects identified with just their approximate location. This is usually referred to as a bounding box, and it works best when the target object looks the same from most angles. Think of something like an apple or a baseball.

Instance segmentation is important in applications where precision is imperative for human health and safety. Imagine a robot designed to remove a tumor, for example. Identifying surgical landmarks with a bounding box would only provide an approximate location, which isn’t exact enough for safety purposes. A pixel-precise mask would provide a much more comfortable margin of safety.

To support these highly precise use cases, teams often rely on expert-level data annotation support.

Instance segmentation differs from semantic segmentation, panoptic segmentation, and object detection in both the type of output it produces and the level of detail it provides. Other forms of segmentation offer different features and require different preparation. The comparison table below outlines how these methods vary by output, granularity, and ideal use cases.

Except for panoptic segmentation, instance segmentation is the most resource-intensive form of segmentation for image recognition models. That’s why many AI teams are building on this technique to increase its efficiency without sacrificing accuracy.

Instance segmentation models generally fall into three architectural categories: two-stage detectors, single-stage networks, and emerging transformer-based models.

Mask R-CNN (short for region-based convolutional neural network) is an instance segmentation and model algorithm that works via a two-stage architecture.

The first stage is what’s called a Region Proposal Network (RPN). This starts by drawing a bounding box around a target object. After the bounding box is drawn, a component called ROIAlign (where ROI stands for Region of Interest) uses the information within the box to extract key features. These are used to generate segmentation masks.

While Mask R-CNN can be very accurate, it requires significant training compute and typically runs slower than real-time. This makes it better suited for quality-critical applications than latency-sensitive ones. It may also struggle with very small objects when limited pixel information is available.

Instead of first detecting a region and then creating a mask, single-stage instance segmentation models like YOLACT and SOLOv2 conduct detection and segmentation in parallel. This results in faster performance.

Both approaches enable image segmentation models to run in real time. This makes them useful for applications such as autonomy or quality control, which require speed.

In the near future, transformer-based approaches may augment or replace both two-stage and single-stage models. Instead of establishing bounding boxes or dividing an image into a grid, transformer-based image segmentation models can process the entire image at once.

Transformer models use an “attention mechanism.” They identify the most relevant points in an image in order to extrapolate what the rest of the mask should look like. For example, if you look at an image of a bicycle and identify its wheels and pedals, you should have a good idea of where the seat and handlebars should go.

These models are currently outperforming standard methods across use cases that include very crowded scenes and scenes with scale variation, i.e., images that contain both large and small objects. Active research and development may bring these models further into the mainstream.

Because instance segmentation is so resource- and training-intensive, not every application is going to be the right fit. That being said, some of the most futuristic and safety-critical projects require fast and accurate image segmentation models.

In every one of these applications, using the right model is just the beginning of the process. Precise, high-quality data annotation will ensure your model performs well across all applications, improving safety, efficiency, and user satisfaction.

One big challenge for instance segmentation is the process of distinguishing objects from each other when they partially overlap. Have you ever zoomed into a photo so far that you can see the pixels? Do you find yourself unable to tell whether you’re looking at part of someone’s hand or part of the object they’re holding? Believe it or not, image segmentation models face the same difficulty. That’s because the model has to infer boundaries from limited pixel information, especially when parts of an object are hidden, or only a small portion is visible.

Similarly, very small objects can also cause difficulty for instance segmentation models, especially in the context of a much larger image. Let’s say your algorithm is looking for an object that only takes up a few dozen pixels in an image that comprises a few million pixels. The target may not contain enough information for the model to make a good guess.

You can address some of these challenges by adopting a more consistent data annotation schema. However, data annotation for instance segmentation can be a challenge all of its own. Imagine going frame by frame through a video with a few thousand frames and drawing a vector around the same object each time. Techniques such as frame sampling can reduce this workload, but it has the potential to reduce accuracy if applied incorrectly.

Lastly, there is your use case to consider. Do you need real-time image recognition for your application? If so, you’ll need to be sure to select the correct model and invest in some hefty infrastructure to support those needs. The more accuracy you need, and the faster you need it, the more difficulty you’ll have growing and scaling.

These challenges aren’t insurmountable. Image annotation support services can address these challenges with high-quality processes and guidance borne of long experience.

There are ways to right-size models for any application, increase the efficiency of data annotation, and optimize model performance to compensate for their potential weaknesses.

Instance segmentation asks an AI model to:

What’s more, instance segmentation asks a computer to perform this task without any breaks or lapses in focus, something that would be unrealistic to expect from a human observer.

Building a reliable instance segmentation system requires thoughtful model selection, careful data preparation, and the right training strategy. It requires not just technical mastery but also practical planning ability. The right model architecture must match the specific use case, and it must incorporate the right data, prepared correctly.

At Sama, we offer data annotation support services that can help your model excel. We can save much of the labor involved with selecting the right model, annotating the required training data, and upholding the standards of quality necessary to succeed.

With dozens of customers, including 40% of FAANG companies, we’re trusted by industry leaders to enable their mission-critical AI solutions. If you’re ready to inaugurate an instance segmentation project, talk to a Sama expert to learn how we can help you improve your annotation quality and optimize your workflow.