The purpose of a LiDAR annotation quality rubric is simple: it ensures that two people will score the same object in identical ways.

The key to accurate automotive annotation is consistency. A team of seasoned annotators equipped with cutting-edge automation tools is a great start, but it isn't enough for a smooth, efficient development process. A good quality rubric is an essential part of that process, and this post answers some of the most common questions about creating and using one.

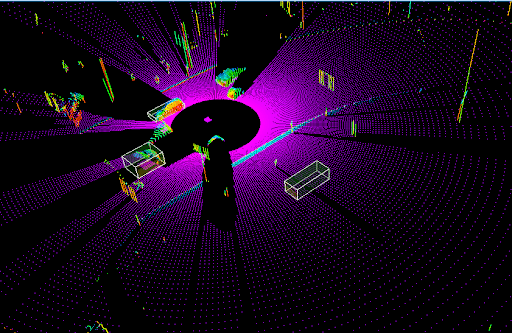

Develop grading consistency early with quality assessment tools.

The purpose of a LiDAR annotation quality rubric is simple: it ensures that two people will score the same object in identical ways. But when should your team begin using a rubric? Very early on. Even during the exploratory research phase, a rubric is a strong tool for gaining insight into future model requirements, edge cases, and more. A good rubric develops with your needs. Creating and using one early in your development cycle does not set its metrics in stone; it is the beginning of the rubric's evolution.

Before you start creating a quality rubric, you first need to define the expected limits of your systems. Consider how accurate your sensor data is to determine how fine-grained your annotations must be for your model to take full advantage of them. Factors that determine this include localization accuracy, camera calibration, system performance, and time to collision (TTC) in worst-case scenarios.

Once you’ve defined your expected limits, you can start defining the quality scoring of your rubric.

Make the intention of your rubric clear to annotators.

Like so much in data annotation, quality definition is about finding a balance between accuracy and time. Consistent scoring methods should capture both common attributes (the attributes your model requires) and edge cases that show up rarely but may impact the scoring of an analyzed file. The annotation instructions should be clear but still cover a lot of ground. Again, the goal is for any two people to score the same object in identical ways.

Getting useful results quickly is the goal here. A great tool for bolstering efficiency is a set of visual examples for annotators. Behind the scenes, you know what you want your rubric to do, but annotators may not. Visual aids help communicate the desired end result of your rubric to annotators, cutting down on confusion and making labeling more efficient.
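
One rough way to check whether any two people really do score the same object in identical ways is to have two reviewers score the same set of tasks and measure how often they agree. The sketch below is only an illustration: the function name `agreement_rate`, the 0-100 score scale, and the `tolerance` parameter are assumptions made for this example, not part of any particular tool.

```python
def agreement_rate(scores_a, scores_b, tolerance=0):
    """Fraction of tasks on which two reviewers' scores differ by at most `tolerance`.

    scores_a and scores_b are equal-length lists of scores for the same tasks,
    in the same order. The 0-100 scale is an assumption for this sketch.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("both reviewers must score the same tasks")
    if not scores_a:
        raise ValueError("at least one scored task is required")
    agreeing = sum(
        1 for a, b in zip(scores_a, scores_b) if abs(a - b) <= tolerance
    )
    return agreeing / len(scores_a)


# Hypothetical scores from two reviewers on the same four tasks.
reviewer_1 = [100, 70, 65, 0]
reviewer_2 = [100, 70, 40, 0]

# Three of the four tasks received identical scores.
print(agreement_rate(reviewer_1, reviewer_2))
```

A low agreement rate on a shared batch may point to instructions that are ambiguous or to visual examples that are missing for a given error type.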

Make sure to lay out the kinds of errors annotators should be looking for. Missed objects and points, inconsistent object tracking and direction of travel recognition, cuboid jittering, and incorrect cuboid size are all error types commonly found in quality rubrics. Defining how these different errors impact the overall score is also an important consideration that, like the rubric itself, should evolve with your needs. This is a key part of defining your quality rubric: critical errors that your model should never make must have harsh corresponding scores. Less critical errors can have “softer” penalties.

Examples of some items that might appear in your quality rubric.

| Critical or non-critical? | Error type | Definition | Task penalty |
| --- | --- | --- | --- |
| Critical | Omission | False negative. Cases where objects to be annotated are left out. | 30% - 100% |
| Critical | Incorrect Grouping | Cases where annotation has been grouped incorrectly. | 30% - 100% |
| Non-critical | Inaccurate Annotation - Too Tight | Cases where part of the object is left out of the tagged area in accordance with the geometric accuracy tolerance. | 5% - 25% |
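
To make the link between error types and the overall score concrete, here is a minimal sketch of how penalties like the example items above could be applied to a single task. Everything in it is an assumption for illustration: the error keys, the choice of the lowest value in each penalty range, the rule that penalties simply add up, and the 0-100 scale. A real rubric would set these according to its own needs.

```python
# Hypothetical penalty table, in percentage points deducted per error.
# The values are the low end of each example range; a real rubric would
# choose a value within the range based on severity.
PENALTY_POINTS = {
    "omission": 30,            # critical
    "incorrect_grouping": 30,  # critical
    "too_tight": 5,            # non-critical
}

CRITICAL_ERRORS = {"omission", "incorrect_grouping"}


def score_task(errors):
    """Return a task score from 0 to 100 after deducting each error's penalty."""
    total_penalty = sum(PENALTY_POINTS[error] for error in errors)
    # Clamp at zero so a task with many errors cannot score below 0.
    return max(0, 100 - total_penalty)


def has_critical_error(errors):
    """True if any error on the task is in the critical category."""
    return any(error in CRITICAL_ERRORS for error in errors)


# One missed object plus one cuboid that is too tight: 100 - 30 - 5.
task_errors = ["omission", "too_tight"]
print(score_task(task_errors), has_critical_error(task_errors))
```

Keeping the penalty table as plain data makes it easy to loosen or tighten penalties as the rubric evolves, without touching the scoring logic.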

Gold Tasks (tasks that have been annotated “perfectly” or that meet the “gold standard”) provide annotation benchmarks for improving accuracy and allow annotators to practice to increase their efficiency. They can be used to align on expectations around precision and quality, and as examples during training.

Gold Tasks can speed up the annotation process while also helping to further develop your rubric by showing how it is currently performing. This will save time down the road by preventing large-scale reassessments of rubric efficacy, and it will force you to consider important questions about your scoring methods. Can you create a perfect task manually? Does the Gold Task offer an accurate representation of the real data your annotators will be working with? Answering these questions is another way to assess your quality rubric and boost its overall usefulness.
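
As a rough illustration of benchmarking against a Gold Task, the sketch below compares an annotator's cuboid with the gold cuboid using intersection over union (IoU). It is a simplification under stated assumptions: cuboids are axis-aligned and given as `(min_corner, max_corner)` tuples, so rotation (yaw) is ignored, and the function names and the `0.7` threshold are hypothetical choices for this example.

```python
def box_volume(box):
    """Volume of an axis-aligned box given as ((x0, y0, z0), (x1, y1, z1))."""
    (x0, y0, z0), (x1, y1, z1) = box
    # A negative extent means the box is empty, so clamp each side at zero.
    return max(0.0, x1 - x0) * max(0.0, y1 - y0) * max(0.0, z1 - z0)


def iou_3d(box_a, box_b):
    """Intersection over union of two axis-aligned 3D boxes."""
    inter_min = tuple(max(a, b) for a, b in zip(box_a[0], box_b[0]))
    inter_max = tuple(min(a, b) for a, b in zip(box_a[1], box_b[1]))
    intersection = box_volume((inter_min, inter_max))
    union = box_volume(box_a) + box_volume(box_b) - intersection
    return intersection / union if union > 0 else 0.0


def matches_gold(candidate, gold, threshold=0.7):
    """True if the candidate cuboid overlaps the gold cuboid closely enough."""
    return iou_3d(candidate, gold) >= threshold


# Hypothetical cuboids in meters: the candidate is shifted 1 m along x.
gold = ((0.0, 0.0, 0.0), (2.0, 2.0, 2.0))
candidate = ((1.0, 0.0, 0.0), (3.0, 2.0, 2.0))
print(iou_3d(candidate, gold), matches_gold(candidate, gold))
```

For rotated cuboids, the overlap computation is more involved, so a production check would likely rely on an established geometry library instead of this simplified version.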

A quality rubric should change to fit your current needs.

Larger rubric attribute and error lists mean more time and higher accuracy, but your model might not need that level of annotation. Since a quality rubric should mature in tandem with your development cycle, what the perfect balance between time and quality looks like will shift.

In early development stages, small data sets are usually all that is needed. Your rubric should be well defined, but less strict in its penalties. If you start with a rubric that defines a smaller range of use cases, you will also have fewer edge cases. For example, annotating a highway is less complex than annotating a road in an urban area, so it requires a simpler rubric.

Similarly, later stages in the development process will likely see the use of large data sets, more complex scenarios, and comprehensive, well-tuned quality rubrics. This is the time for nitpicking on large amounts of data.

Whatever stage of development your organization is in, your rubric should match your current ontology size and label accuracy needs to maintain efficiency. Tighter annotations take your annotators more time to deliver, and costs increase accordingly. There is one problem with later development stages: internal annotation might not be enough for the large data sets and complex annotation rules you've developed.

Experienced labeling partners are essential for complex use cases.

There is one last consideration: who should annotate your data? For your first ground truth iterations, internal annotators are often the way to go. This allows labeled data to be generated and rubric iteration to happen as fast as possible while initial research and model development are also being done.

Down the line, however, third-party annotation will be key to your success. Groups that specialize in annotation can offer high-quality annotation to meet your needs and even assist in getting your rubric to the next level. Internal testing is sufficient up until you surpass simple drive testing. Complex driving annotation requires more experience than internal annotators likely have, and this more involved testing also presents difficult-to-define edge cases. While it may increase cost in the short term, bringing in outside experts will likely increase the overall efficacy of your model training.

Sama provides the kind of expertise needed for the mid to late stages of development. Operating since 2008, we have the experience and advanced tools to give our clients high-quality annotations and labeling analysis. We offer high-quality SLAs and comprehensive QA practices, utilize ethical AI and automated quality checks, and can work at scale. Sama can help you get the most out of your models by pinpointing easy-to-miss edge cases and providing fast and accurate labeling.
